ONNX

How to use ONNX Runtime for inference

Installation

pip install optimum["onnxruntime"]

Stable Diffusion

Inference

from optimum.onnxruntime import ORTStableDiffusionPipeline

model_id = "runwayml/stable-diffusion-v1-5"

pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id, export=True)

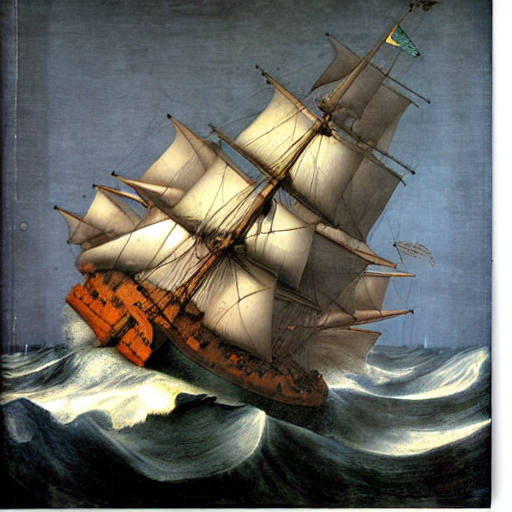

prompt = "sailing ship in storm by Leonardo da Vinci"

image = pipeline(prompt).images[0]

pipeline.save_pretrained("./onnx-stable-diffusion-v1-5")Supported tasks

Task

Loading Class

Stable Diffusion XL

Export

Inference
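As with the Stable Diffusion example earlier on this page, an SDXL checkpoint can be exported on the first load and reused afterwards. The following is a minimal sketch, not the documented recipe. It assumes the `ORTStableDiffusionXLPipeline` class from `optimum.onnxruntime` and the `stabilityai/stable-diffusion-xl-base-1.0` checkpoint, neither of which is named in this section. The helper names `resolve_source` and `load_sdxl_pipeline`, the `./sd_xl_onnx` directory, and the "contains a `.onnx` file" check are hypothetical choices made for illustration.

```python
from pathlib import Path


def resolve_source(onnx_dir, model_id):
    """Return (source, export) to pass to from_pretrained."""
    onnx_dir = Path(onnx_dir)
    # Heuristic (an assumption, not an Optimum rule): a directory that
    # already holds at least one .onnx file is treated as exported.
    if onnx_dir.is_dir() and any(onnx_dir.rglob("*.onnx")):
        return str(onnx_dir), False
    # Otherwise load the original checkpoint and convert it on the fly.
    return model_id, True


def load_sdxl_pipeline(
    onnx_dir="./sd_xl_onnx",  # hypothetical local directory
    model_id="stabilityai/stable-diffusion-xl-base-1.0",  # assumed checkpoint
):
    # Imported lazily so resolve_source works without optimum installed.
    from optimum.onnxruntime import ORTStableDiffusionXLPipeline

    source, export = resolve_source(onnx_dir, model_id)
    pipeline = ORTStableDiffusionXLPipeline.from_pretrained(source, export=export)
    if export:
        # Save the converted model so later calls skip the export step.
        pipeline.save_pretrained(onnx_dir)
    return pipeline


# Usage (the first call downloads and converts the checkpoint):
# pipeline = load_sdxl_pipeline()
# image = pipeline("sailing ship in storm by Leonardo da Vinci").images[0]
```

Keeping the export decision in a separate function means it can be checked without downloading any weights. The pipeline call itself mirrors the `from_pretrained(..., export=True)` and `save_pretrained` pattern used for Stable Diffusion above.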

Supported tasks

Task

Loading Class

Known Issues
