ConvNeXt V2

Overview

The ConvNeXt V2 model was proposed in ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders by Sanghyun Woo, Shoubhik Debnath, Ronghang Hu, Xinlei Chen, Zhuang Liu, In So Kweon, and Saining Xie. ConvNeXt V2 is a pure convolutional model (ConvNet) inspired by the design of Vision Transformers, and a successor to ConvNeXt.

The abstract from the paper is the following:

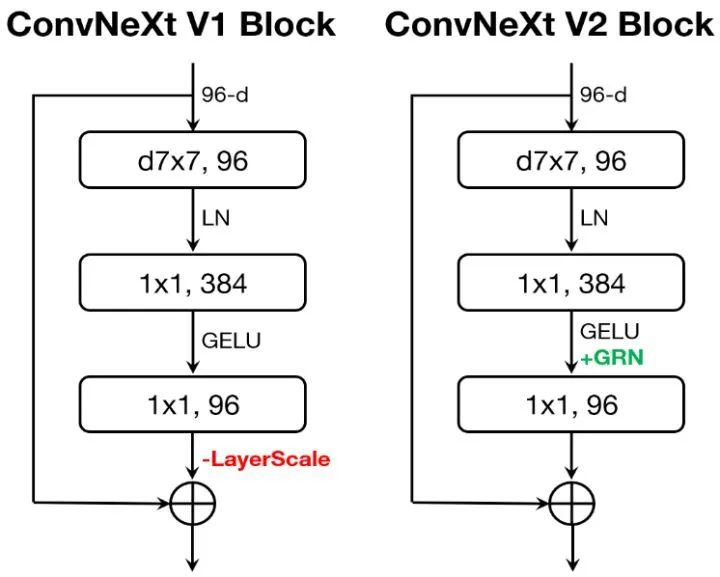

Driven by improved architectures and better representation learning frameworks, the field of visual recognition has enjoyed rapid modernization and performance boost in the early 2020s. For example, modern ConvNets, represented by ConvNeXt, have demonstrated strong performance in various scenarios. While these models were originally designed for supervised learning with ImageNet labels, they can also potentially benefit from self-supervised learning techniques such as masked autoencoders (MAE). However, we found that simply combining these two approaches leads to subpar performance. In this paper, we propose a fully convolutional masked autoencoder framework and a new Global Response Normalization (GRN) layer that can be added to the ConvNeXt architecture to enhance inter-channel feature competition. This co-design of self-supervised learning techniques and architectural improvement results in a new model family called ConvNeXt V2, which significantly improves the performance of pure ConvNets on various recognition benchmarks, including ImageNet classification, COCO detection, and ADE20K segmentation. We also provide pre-trained ConvNeXt V2 models of various sizes, ranging from an efficient 3.7M-parameter Atto model with 76.7% top-1 accuracy on ImageNet, to a 650M Huge model that achieves a state-of-the-art 88.9% accuracy using only public training data.

Tips:

See the code examples below each model regarding usage.

ConvNeXt V2 architecture. Taken from the original paper.

This model was contributed by adirik. The original code can be found here.

Resources

A list of official BOINC AI and community (indicated by 🌎) resources to help you get started with ConvNeXt V2.

Image Classification

ConvNextV2ForImageClassification is supported by this example script and notebook.

If you’re interested in submitting a resource to be included here, please feel free to open a Pull Request and we’ll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

ConvNextV2Config

class transformers.ConvNextV2Config

( num_channels = 3, patch_size = 4, num_stages = 4, hidden_sizes = None, depths = None, hidden_act = 'gelu', initializer_range = 0.02, layer_norm_eps = 1e-12, drop_path_rate = 0.0, image_size = 224, out_features = None, out_indices = None, **kwargs )

Parameters

num_channels (int, optional, defaults to 3) — The number of input channels.

patch_size (int, optional, defaults to 4) — Patch size to use in the patch embedding layer.

num_stages (int, optional, defaults to 4) — The number of stages in the model.

hidden_sizes (List[int], optional, defaults to [96, 192, 384, 768]) — Dimensionality (hidden size) at each stage.

depths (List[int], optional, defaults to [3, 3, 9, 3]) — Depth (number of blocks) for each stage.

hidden_act (str or function, optional, defaults to "gelu") — The non-linear activation function (function or string) in each block. If string, "gelu", "relu", "selu" and "gelu_new" are supported.

initializer_range (float, optional, defaults to 0.02) — The standard deviation of the truncated_normal_initializer for initializing all weight matrices.

layer_norm_eps (float, optional, defaults to 1e-12) — The epsilon used by the layer normalization layers.

drop_path_rate (float, optional, defaults to 0.0) — The drop rate for stochastic depth.

out_features (List[str], optional) — If used as backbone, list of features to output. Can be any of "stem", "stage1", "stage2", etc. (depending on how many stages the model has). If unset and out_indices is set, will default to the corresponding stages. If unset and out_indices is unset, will default to the last stage.

out_indices (List[int], optional) — If used as backbone, list of indices of features to output. Can be any of 0, 1, 2, etc. (depending on how many stages the model has). If unset and out_features is set, will default to the corresponding stages. If unset and out_features is unset, will default to the last stage.
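The defaulting rules for out_features and out_indices can be sketched in plain Python. This is only an illustration of the rules as described above, not the library's own code: the helper name resolve_backbone_outputs is hypothetical, and it assumes that index 0 corresponds to "stem" and index i to "stage{i}".

```python
from typing import List, Optional, Sequence, Tuple


def resolve_backbone_outputs(
    num_stages: int = 4,
    out_features: Optional[Sequence[str]] = None,
    out_indices: Optional[Sequence[int]] = None,
) -> Tuple[List[str], List[int]]:
    """Hypothetical helper mirroring the documented defaulting rules."""
    # Assumption: names are ordered "stem", "stage1", ..., "stage{num_stages}".
    stage_names = ["stem"] + [f"stage{i}" for i in range(1, num_stages + 1)]

    if out_features is None and out_indices is None:
        # Neither is set: fall back to the last stage.
        out_features = [stage_names[-1]]
        out_indices = [len(stage_names) - 1]
    elif out_features is None:
        # Only indices are set: look up the corresponding stage names.
        out_features = [stage_names[i] for i in out_indices]
    elif out_indices is None:
        # Only names are set: look up the corresponding indices.
        out_indices = [stage_names.index(name) for name in out_features]

    return list(out_features), list(out_indices)


print(resolve_backbone_outputs())
print(resolve_backbone_outputs(out_indices=[1, 3]))
print(resolve_backbone_outputs(out_features=["stem", "stage2"]))
```

The sketch does not validate the case where both arguments are set; it simply returns them unchanged.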

This is the configuration class to store the configuration of a ConvNextV2Model. It is used to instantiate a ConvNeXt V2 model according to the specified arguments, defining the model architecture. Instantiating a configuration with the defaults will yield a configuration similar to that of the ConvNeXt V2 facebook/convnextv2-tiny-1k-224 architecture.

Configuration objects inherit from PretrainedConfig and can be used to control the model outputs. Read the documentation from PretrainedConfig for more information.

Example:
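A minimal sketch of creating configurations, assuming the transformers library is installed. The values passed to the second configuration are illustrative only and do not correspond to any released checkpoint.

```python
from transformers import ConvNextV2Config

# Default configuration (similar to facebook/convnextv2-tiny-1k-224).
configuration = ConvNextV2Config()
print(configuration.hidden_sizes)
print(configuration.depths)

# A smaller custom configuration; these sizes are illustrative assumptions.
custom = ConvNextV2Config(
    hidden_sizes=[32, 64, 128, 256],
    depths=[1, 1, 2, 1],
    drop_path_rate=0.1,
)
print(custom.num_stages, custom.drop_path_rate)
```

Passing a configuration to ConvNextV2Model creates a model with randomly initialized weights; pretrained weights are loaded with from_pretrained() instead.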


ConvNextV2Model

class transformers.ConvNextV2Model

( config )

Parameters

config (ConvNextV2Config) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

The bare ConvNextV2 model outputting raw features without any specific head on top. This model is a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matters related to general usage and behavior.

forward

( pixel_values: FloatTensor = None, output_hidden_states: typing.Optional[bool] = None, return_dict: typing.Optional[bool] = None ) → transformers.modeling_outputs.BaseModelOutputWithPoolingAndNoAttention or tuple(torch.FloatTensor)

Parameters

pixel_values (torch.FloatTensor of shape (batch_size, num_channels, height, width)) — Pixel values. Pixel values can be obtained using ConvNextImageProcessor. See ConvNextImageProcessor.__call__() for details.

output_hidden_states (bool, optional) — Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.

return_dict (bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.

Returns

transformers.modeling_outputs.BaseModelOutputWithPoolingAndNoAttention or tuple(torch.FloatTensor)

A transformers.modeling_outputs.BaseModelOutputWithPoolingAndNoAttention or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (ConvNextV2Config) and inputs.

last_hidden_state (torch.FloatTensor of shape (batch_size, num_channels, height, width)) — Sequence of hidden-states at the output of the last layer of the model.

pooler_output (torch.FloatTensor of shape (batch_size, hidden_size)) — Last layer hidden-state after a pooling operation on the spatial dimensions.

hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True) — Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape (batch_size, num_channels, height, width). Hidden-states of the model at the output of each layer plus the optional initial embedding outputs.
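To make the returned shapes concrete, the arithmetic can be sketched without running the model. The helper stage_output_shapes is hypothetical, and it rests on two assumptions about the architecture: the patch embedding reduces the spatial size by patch_size, and every stage after the first halves it again.

```python
from typing import List, Tuple


def stage_output_shapes(
    batch_size: int = 1,
    image_size: int = 224,
    patch_size: int = 4,
    hidden_sizes: Tuple[int, ...] = (96, 192, 384, 768),
) -> List[Tuple[int, int, int, int]]:
    """Hypothetical helper: expected (batch, channels, height, width) per stage."""
    # Assumption: the patch embedding downsamples by patch_size.
    side = image_size // patch_size
    shapes = []
    for stage, channels in enumerate(hidden_sizes):
        # Assumption: every stage after the first halves the spatial size.
        if stage > 0:
            side //= 2
        shapes.append((batch_size, channels, side, side))
    return shapes


shapes = stage_output_shapes()
for shape in shapes:
    print(shape)

# last_hidden_state corresponds to the final stage; pooling over height and
# width leaves (batch_size, hidden_size) for pooler_output.
last = shapes[-1]
pooler_shape = (last[0], last[1])
print(pooler_shape)
```

Under these assumptions, the default configuration with a 224 x 224 input ends in a 7 x 7 feature map with 768 channels.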

The ConvNextV2Model forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance rather than forward() directly, since the instance takes care of running the pre- and post-processing steps while a direct forward() call silently ignores them.

Example:
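A minimal offline sketch of a forward pass, assuming transformers and PyTorch are installed. It uses a small, randomly initialized model and a random tensor in place of preprocessed images, so the outputs are only useful for inspecting shapes; the layer sizes are illustrative assumptions.

```python
import torch
from transformers import ConvNextV2Config, ConvNextV2Model

# Small random-weight model so the example runs quickly without downloads.
config = ConvNextV2Config(hidden_sizes=[8, 16, 32, 64], depths=[1, 1, 1, 1])
model = ConvNextV2Model(config)
model.eval()

# Stand-in for processor output: (batch_size, num_channels, height, width).
pixel_values = torch.randn(2, 3, 64, 64)

# Call the module instance, not forward(), as recommended above.
with torch.no_grad():
    outputs = model(pixel_values=pixel_values, output_hidden_states=True)

print(outputs.last_hidden_state.shape)
print(outputs.pooler_output.shape)
print(len(outputs.hidden_states))
```

With real images, pixel_values would come from ConvNextImageProcessor and the weights from from_pretrained(), for example with the facebook/convnextv2-tiny-1k-224 checkpoint.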


ConvNextV2ForImageClassification

class transformers.ConvNextV2ForImageClassification

( config )

Parameters

config (ConvNextV2Config) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

ConvNextV2 Model with an image classification head on top (a linear layer on top of the pooled features), e.g. for ImageNet.

This model is a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matters related to general usage and behavior.

forward

( pixel_values: FloatTensor = None, labels: typing.Optional[torch.LongTensor] = None, output_hidden_states: typing.Optional[bool] = None, return_dict: typing.Optional[bool] = None ) → transformers.modeling_outputs.ImageClassifierOutputWithNoAttention or tuple(torch.FloatTensor)

Parameters

pixel_values (torch.FloatTensor of shape (batch_size, num_channels, height, width)) — Pixel values. Pixel values can be obtained using ConvNextImageProcessor. See ConvNextImageProcessor.__call__() for details.

output_hidden_states (bool, optional) — Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.

return_dict (bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.

labels (torch.LongTensor of shape (batch_size,), optional) — Labels for computing the image classification/regression loss. Indices should be in [0, ..., config.num_labels - 1]. If config.num_labels == 1 a regression loss is computed (Mean-Square loss); if config.num_labels > 1 a classification loss is computed (Cross-Entropy).
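The rule for choosing the loss from config.num_labels can be sketched in plain Python. The function names below are hypothetical and the sketch follows only the rule stated for labels; the library may apply additional rules that are not described here.

```python
import math
from typing import Sequence


def mse_loss(predictions: Sequence[float], targets: Sequence[float]) -> float:
    """Mean of squared differences between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def cross_entropy_loss(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean negative log-likelihood of the correct class under softmax."""
    total = 0.0
    for row, label in zip(logits, labels):
        # Log-sum-exp with the maximum subtracted for numerical stability.
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[label]
    return total / len(labels)


def classification_head_loss(logits, labels, num_labels: int) -> float:
    """Hypothetical helper: pick the loss from num_labels as documented."""
    if num_labels == 1:
        # Regression: a single score per example, compared with its target.
        return mse_loss([row[0] for row in logits], labels)
    # Classification: labels are class indices in [0, num_labels - 1].
    return cross_entropy_loss(logits, labels)


regression = classification_head_loss([[0.5], [1.5]], [1.0, 1.0], num_labels=1)
classification = classification_head_loss([[0.0, 0.0]], [0], num_labels=2)
print(regression, classification)
```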

Returns

transformers.modeling_outputs.ImageClassifierOutputWithNoAttention or tuple(torch.FloatTensor)

A transformers.modeling_outputs.ImageClassifierOutputWithNoAttention or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (ConvNextV2Config) and inputs.

loss (torch.FloatTensor of shape (1,), optional, returned when labels is provided) — Classification (or regression if config.num_labels==1) loss.

logits (torch.FloatTensor of shape (batch_size, config.num_labels)) — Classification (or regression if config.num_labels==1) scores (before SoftMax).

hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True) — Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each stage) of shape (batch_size, num_channels, height, width). Hidden-states (also called feature maps) of the model at the output of each stage.

The ConvNextV2ForImageClassification forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance rather than forward() directly, since the instance takes care of running the pre- and post-processing steps while a direct forward() call silently ignores them.

Example:
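A minimal offline sketch of classification with labels, assuming transformers and PyTorch are installed. The model is small and randomly initialized and the input is a random tensor, so the predictions carry no meaning; the sizes and the three-class setup are illustrative assumptions.

```python
import torch
from transformers import ConvNextV2Config, ConvNextV2ForImageClassification

# Small random-weight classifier with three hypothetical classes.
config = ConvNextV2Config(
    hidden_sizes=[8, 16, 32, 64],
    depths=[1, 1, 1, 1],
    num_labels=3,
)
model = ConvNextV2ForImageClassification(config)
model.eval()

# Stand-in for processor output and integer class labels.
pixel_values = torch.randn(2, 3, 64, 64)
labels = torch.tensor([0, 2])

with torch.no_grad():
    outputs = model(pixel_values=pixel_values, labels=labels)

# logits has shape (batch_size, config.num_labels); loss is returned
# because labels were provided.
print(outputs.logits.shape)
print(outputs.loss.item())

predicted = outputs.logits.argmax(dim=-1)
print(predicted.tolist())
```

To classify real images, load pretrained weights with ConvNextV2ForImageClassification.from_pretrained("facebook/convnextv2-tiny-1k-224") and obtain pixel_values from ConvNextImageProcessor.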
