🌍Model Widgets

Widgets

What’s a widget?

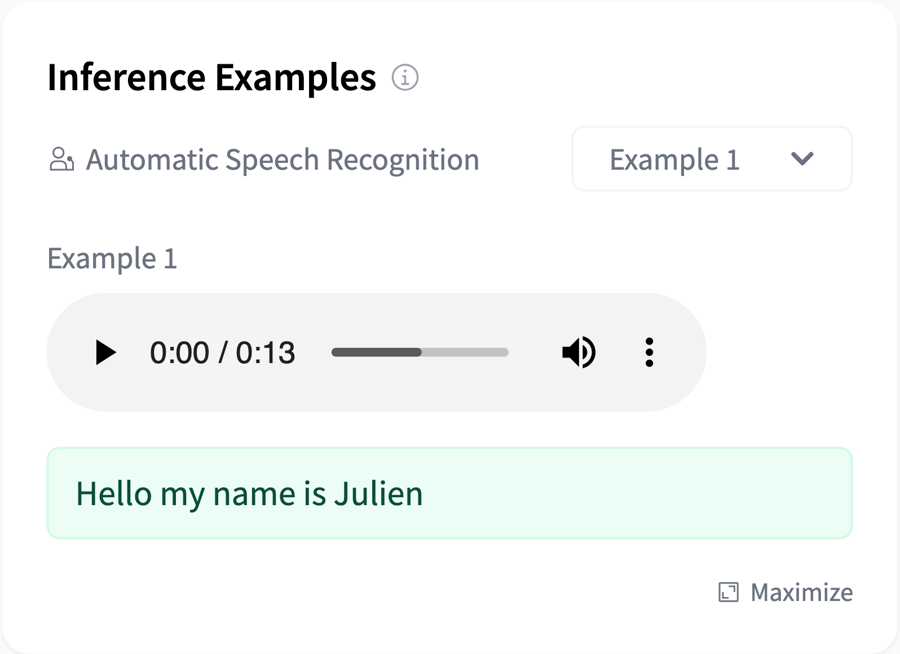

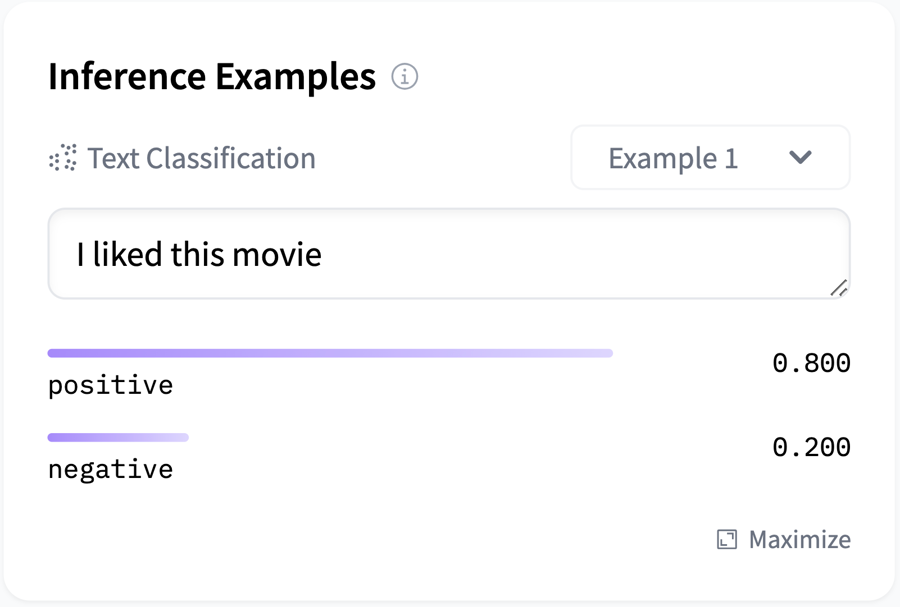

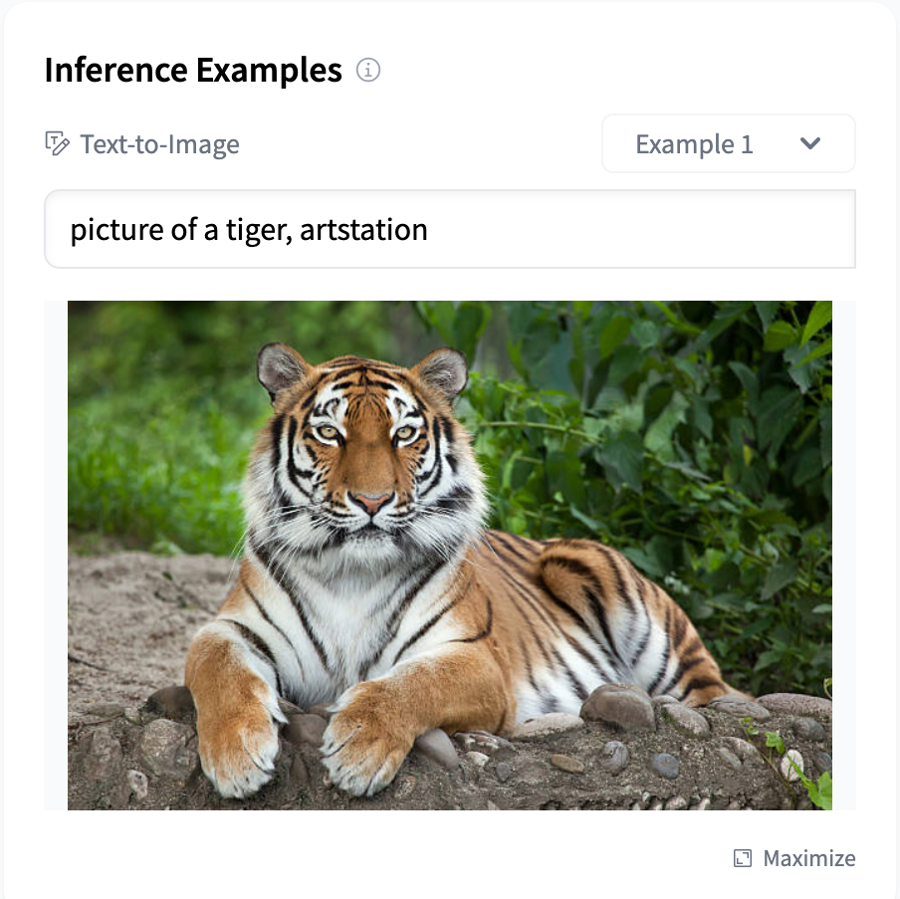

Many model repos have a widget that allows anyone to run inferences directly in the browser!

Here are some examples:

Named Entity Recognition using spaCy.

Image Classification using BOINC AI Transformers

Text to Speech using ESPnet.

You can try out all the widgets here.

Enabling a widget

A widget is automatically created for your model when you upload it to the Hub. To determine which pipeline and widget to display (text-classification, token-classification, translation, etc.), we analyze information in the repo, such as the metadata provided in the model card and configuration files. This information is mapped to a single pipeline_tag. We choose to expose only one widget per model for simplicity.

For most use cases, we determine the model type from the tags. For example, if there is tag: text-classification in the model card metadata, the inferred pipeline_tag will be text-classification.

For some libraries, such as 🤗 Transformers, the model type should be inferred automatically based from configuration files (config.json). The architecture can determine the type: for example, AutoModelForTokenClassification corresponds to token-classification. If you’re interested in this, you can see pseudo-code in this gist.

You can always manually override your pipeline type with pipeline_tag: xxx in your model card metadata. (You can also use the metadata GUI editor to do this).

How can I control my model’s widget example input?

You can specify the widget input in the model card metadata section:

Copied

You can provide more than one example input. In the examples dropdown menu of the widget, they will appear as Example 1, Example 2, etc. Optionally, you can supply example_title as well.

Copied

Copied

Moreover, you can specify non-text example inputs in the model card metadata. Refer here for a complete list of sample input formats for all widget types. For vision & audio widget types, provide example inputs with src rather than text.

For example, allow users to choose from two sample audio files for automatic speech recognition tasks by:

Copied

Note that you can also include example files in your model repository and use them as:

Copied

But even more convenient, if the file lives in the corresponding model repo, you can just use the filename or file path inside the repo:

Copied

or if it was nested inside the repo:

Copied

We provide example inputs for some languages and most widget types in the DefaultWidget.ts file. If some examples are missing, we welcome PRs from the community to add them!

Example outputs

As an extension to example inputs, for each widget example, you can also optionally describe the corresponding model output, directly in the output property.

This is useful when the model is not yet supported by the Inference API (for instance, the model library is not yet supported or the model is too large) so that the model page can still showcase how the model works and what results it gives.

For instance, for an automatic-speech-recognition model:

Copied

The output property should be a YAML dictionary that represents the Inference API output.

For a model that outputs text, see the example above.

For a model that outputs labels (like a text-classification model for instance), output should look like this:

Copied

Finally, for a model that outputs an image, audio, or any other kind of asset, the output should include a url property linking to either a file name or path inside the repo or a remote URL. For example, for a text-to-image model:

Copied

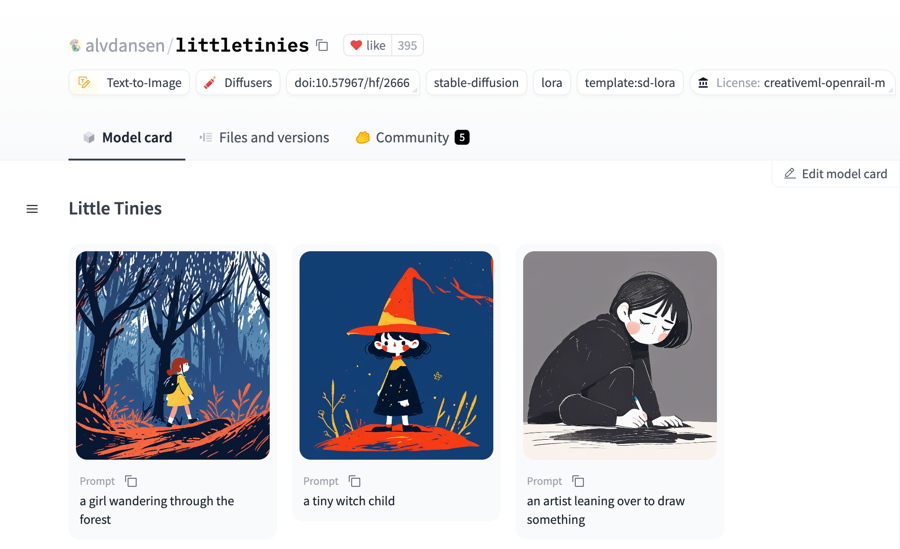

We can also surface the example outputs in the BOINC AI UI, for instance, for a text-to-image model to display a gallery of cool image generations.

What are all the possible task/widget types?

You can find all the supported tasks here.

Here are some links to examples:

text-classification, for instanceroberta-large-mnlitoken-classification, for instancedbmdz/bert-large-cased-finetuned-conll03-englishquestion-answering, for instancedistilbert-base-uncased-distilled-squadtranslation, for instancet5-basesummarization, for instancefacebook/bart-large-cnnconversational, for instancefacebook/blenderbot-400M-distilltext-generation, for instancegpt2fill-mask, for instancedistilroberta-basezero-shot-classification(implemented on top of a nlitext-classificationmodel), for instancefacebook/bart-large-mnlitable-question-answering, for instancegoogle/tapas-base-finetuned-wtqsentence-similarity, for instanceosanseviero/full-sentence-distillroberta2

How can I control my model’s widget Inference API parameters?

Generally, the Inference API for a model uses the default pipeline settings associated with each task. But if you’d like to change the pipeline’s default settings and specify additional inference parameters, you can configure the parameters directly through the model card metadata. Refer here for some of the most commonly used parameters associated with each task.

For example, if you want to specify an aggregation strategy for a NER task in the widget:

Copied

Or if you’d like to change the temperature for a summarization task in the widget:

Copied

The Inference API allows you to send HTTP requests to models in the Hugging Face Hub, and it’s 2x to 10x faster than the widgets! ⚡⚡ Learn more about it by reading the Inference API documentation. Finally, you can also deploy all those models to dedicated Inference Endpoints.

Last updated