Neuron models for inference

The APIs presented in this documentation apply to inference on inf2, trn1 and inf1 instances.

NeuronModelForXXX classes help to load models from the BOINC AI Hub and compile them to a serialized format optimized for neuron devices. You will then be able to load the model and run inference with the acceleration powered by AWS Neuron devices.

Switching from Transformers to Optimum

The optimum.neuron.NeuronModelForXXX model classes are APIs compatible with BOINC AI Transformers models. This means seamless integration with BOINC AI’s ecosystem. You can just replace your AutoModelForXXX class with the corresponding NeuronModelForXXX class in optimum.neuron.

If you already use Transformers, you will be able to reuse your code just by replacing model classes:

     from transformers import AutoTokenizer
    -from transformers import AutoModelForSequenceClassification
    +from optimum.neuron import NeuronModelForSequenceClassification

     # PyTorch checkpoint
    -model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased-finetuned-sst-2-english")
    +model = NeuronModelForSequenceClassification.from_pretrained("distilbert-base-uncased-finetuned-sst-2-english",
    +                                             export=True, **neuron_kwargs)

As shown above, when you use NeuronModelForXXX for the first time, you will need to set export=True to compile your model from PyTorch to a neuron-compatible format.

You will also need to pass Neuron-specific parameters to configure the export. Each model architecture has its own set of parameters, as detailed in the next sections.

Once your model has been exported, you can save it either locally or on the BOINC AI Model Hub:

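A minimal sketch of the local save, assuming the compiled model exposes the Transformers-style save_pretrained method. The save_compiled_model helper and the directory name are hypothetical. Uploading to the Hub is usually done with push_to_hub, whose exact arguments should be checked against your installed version.

```python
from pathlib import Path


def save_compiled_model(model, save_dir: str) -> Path:
    """Save an already exported Neuron model so later runs can skip compilation."""
    target = Path(save_dir)
    target.mkdir(parents=True, exist_ok=True)
    # Assumption: save_pretrained writes the compiled artifacts and config.json.
    model.save_pretrained(target)
    return target


# Usage on a Neuron instance (not executed here):
# model = NeuronModelForSequenceClassification.from_pretrained(model_id, export=True, **neuron_kwargs)
# save_compiled_model(model, "a_local_path_for_compiled_neuron_model")
```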

The next time you want to run inference, just load your compiled model, which saves you the compilation time:

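A minimal sketch of the reload, assuming the model was saved to a local directory. The helper names are hypothetical, and the config.json check is only a sanity test added for illustration.

```python
from pathlib import Path


def has_export_config(model_dir: str) -> bool:
    """Return True if the directory holds the config.json that stores the export arguments."""
    return (Path(model_dir) / "config.json").is_file()


def load_compiled_model(model_dir: str):
    """Reload a compiled model; neither export=True nor Neuron arguments are passed."""
    if not has_export_config(model_dir):
        raise FileNotFoundError(f"no config.json found in {model_dir}")
    # Imported lazily so the check above also runs on machines without optimum-neuron.
    from optimum.neuron import NeuronModelForSequenceClassification

    return NeuronModelForSequenceClassification.from_pretrained(model_dir)
```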

As you can see, there is no need to pass the neuron arguments used during the export: they are saved in a config.json file and restored automatically by the NeuronModelForXXX class.

The first inference run includes a warmup phase, so it typically has 3x-4x higher latency than a regular run.
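To see the warmup effect on your own model, you can time the first call separately from the following ones. This is a generic timing sketch, not part of the library; measure_warmup is a hypothetical helper and run_once stands for any zero-argument callable that performs one inference.

```python
import time
from statistics import median


def measure_warmup(run_once, repeats: int = 5):
    """Time the first call separately from later calls.

    Returns (first_latency, steady_latency) in seconds, where steady_latency
    is the median of the calls made after the first one.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    start = time.perf_counter()
    run_once()  # warmup call, expected to be the slow one
    first = time.perf_counter() - start

    later = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        later.append(time.perf_counter() - start)
    return first, median(later)


# Usage (not executed here):
# first, steady = measure_warmup(lambda: model(**inputs))
# print(f"warmup: {first:.3f}s, steady state: {steady:.3f}s")
```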

Discriminative NLP models

As explained in the previous section, you will need only a few modifications to your Transformers code to export and run NLP models:

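A minimal sketch of such an export, assuming compiler arguments named auto_cast and auto_cast_type and input shapes named batch_size and sequence_length. The shape values and the export_and_classify helper are hypothetical and should be adapted to your inputs.

```python
MODEL_ID = "distilbert-base-uncased-finetuned-sst-2-english"

# Assumed compiler arguments: cast FP32 matrix multiplications to BF16.
compiler_args = {"auto_cast": "matmul", "auto_cast_type": "bf16"}
# Assumed static shapes; pick values that cover the inputs you expect.
input_shapes = {"batch_size": 1, "sequence_length": 64}


def export_and_classify(text: str) -> str:
    """Compile the checkpoint for Neuron, then classify one sentence."""
    # Imported lazily: these packages are only needed on a Neuron instance.
    from transformers import AutoTokenizer
    from optimum.neuron import NeuronModelForSequenceClassification

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = NeuronModelForSequenceClassification.from_pretrained(
        MODEL_ID, export=True, **compiler_args, **input_shapes
    )
    inputs = tokenizer(text, return_tensors="pt")
    logits = model(**inputs).logits
    # With batch_size=1 the flattened argmax is the predicted class index.
    return model.config.id2label[logits.argmax().item()]
```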

compiler_args are optional arguments for the compiler. They usually control how the compiler trades off inference performance (latency and throughput) against accuracy. Here we cast FP32 operations to BF16 using the Neuron matrix-multiplication engine.

input_shapes is the mandatory static shape information that you need to pass to the neuron compiler. Wondering what shapes are mandatory for your model? Check it out with the following code:

Be careful: the input shapes used for compilation should be no smaller than the size of the inputs that you will feed into the model during inference.

What if input sizes are smaller than compilation input shapes?

No worries, the NeuronModelForXXX class will pad your inputs to an eligible shape. You can also set dynamic_batch_size=True in the from_pretrained method to enable dynamic batching, which means that your inputs can have a variable batch size.

(Just keep in mind: dynamic batching and padding bring flexibility, but they also reduce performance. Fair enough!)
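To make the padding idea concrete, here is a plain-Python illustration of growing a small batch to the compiled static shape. It is not the library's implementation; pad_to_static_shape and the default pad_token_id are assumptions made for the example.

```python
def pad_to_static_shape(batch, batch_size, sequence_length, pad_token_id=0):
    """Pad a list of token-id lists up to (batch_size, sequence_length).

    Returns (padded_ids, attention_mask). Raises ValueError if the input is
    larger than the compiled shape, since padding can only grow inputs.
    """
    if len(batch) > batch_size:
        raise ValueError("batch is larger than the compiled batch_size")
    padded, mask = [], []
    for ids in batch:
        if len(ids) > sequence_length:
            raise ValueError("sequence is longer than the compiled sequence_length")
        extra = sequence_length - len(ids)
        padded.append(list(ids) + [pad_token_id] * extra)
        mask.append([1] * len(ids) + [0] * extra)
    # Fill the missing rows with all-padding sequences.
    for _ in range(batch_size - len(batch)):
        padded.append([pad_token_id] * sequence_length)
        mask.append([0] * sequence_length)
    return padded, mask
```

The padded rows and positions are computed by the device even though their results are discarded, which is one reason padding costs performance.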

Generative NLP models

As explained before, you will need only a few modifications to your Transformers code to export and run generative NLP models.

Configuring the export of a generative model

As for non-generative models, two sets of parameters can be passed to the from_pretrained() method to configure how a transformers checkpoint is exported to a neuron optimized model:

compiler_args = { num_cores, auto_cast_type } are optional arguments for the compiler. They usually control how the compiler trades off inference performance (latency and throughput) against accuracy.

input_shapes = { batch_size, sequence_length } correspond to the static shape of the model input and the KV-cache (attention keys and values for past tokens).

num_cores is the number of neuron cores used when instantiating the model. Each neuron core has 16 GB of memory, which means that bigger models need to be split on multiple cores. Defaults to 1.

auto_cast_type specifies the format to encode the weights. It can be one of fp32 (float32), fp16 (float16) or bf16 (bfloat16). Defaults to fp32.

batch_size is the number of input sequences that the model will accept. Defaults to 1.

sequence_length is the maximum number of tokens in an input sequence. Defaults to max_position_embeddings (n_positions for older models).

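A minimal sketch of such a configuration, together with a rough estimate of how many cores the weights alone require. The estimate is only a lower bound derived from the 16 GB per core figure: it ignores the KV-cache and activations, and the library may place further constraints on num_cores. The gpt2 model id, the chosen values and both helper names are hypothetical.

```python
import math

BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "bf16": 2}
CORE_MEMORY_BYTES = 16 * 1024**3  # 16 GB per neuron core


def min_cores_for_weights(num_parameters: int, auto_cast_type: str = "fp32") -> int:
    """Lower bound on num_cores from the weight size alone."""
    weight_bytes = num_parameters * BYTES_PER_VALUE[auto_cast_type]
    return max(1, math.ceil(weight_bytes / CORE_MEMORY_BYTES))


# Hypothetical export settings for a small model.
compiler_args = {"num_cores": 1, "auto_cast_type": "fp16"}
input_shapes = {"batch_size": 1, "sequence_length": 512}


def export_causal_lm(model_id: str = "gpt2"):
    """Export a causal LM checkpoint with the settings above (needs a Neuron instance)."""
    from optimum.neuron import NeuronModelForCausalLM

    return NeuronModelForCausalLM.from_pretrained(
        model_id, export=True, **compiler_args, **input_shapes
    )
```

For example, under these assumptions a model with 7 billion parameters needs at least two cores in fp32 but could fit its weights on one core in fp16 or bf16.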

As explained before, these parameters can only be configured during export. This means in particular that during inference:

the batch_size of the inputs should be equal to the batch_size used during export,

the length of the input sequences should be lower than the sequence_length used during export,

the maximum number of tokens (input + generated) cannot exceed the sequence_length used during export.
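These three constraints can be checked before calling the model. The following is a plain-Python sketch; check_generation_request is a hypothetical helper, not a library function.

```python
def check_generation_request(batch_size, prompt_length, max_new_tokens,
                             export_batch_size, export_sequence_length):
    """Return the list of violated constraints (empty when the request is valid)."""
    problems = []
    if batch_size != export_batch_size:
        problems.append("batch_size must equal the batch_size used during export")
    if prompt_length >= export_sequence_length:
        problems.append("input length must be lower than the exported sequence_length")
    if prompt_length + max_new_tokens > export_sequence_length:
        problems.append("input + generated tokens exceed the exported sequence_length")
    return problems
```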

Text generation inference

As with the original transformers models, use generate() instead of forward() to generate text sequences.

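A minimal sketch of a generation call, assuming a tokenizer and a model that follow the usual Transformers conventions. The generate_text helper, the prompt and the sampling values are hypothetical.

```python
def generate_text(model, tokenizer, prompt: str, **generation_kwargs):
    """Tokenize a prompt, call generate(), and decode the result."""
    inputs = tokenizer(prompt, return_tensors="pt")
    # generate() runs the whole decoding loop, unlike a single forward() call.
    output_ids = model.generate(**inputs, **generation_kwargs)
    return tokenizer.batch_decode(output_ids, skip_special_tokens=True)


# Usage on a Neuron instance (not executed here):
# from transformers import AutoTokenizer
# from optimum.neuron import NeuronModelForCausalLM
# tokenizer = AutoTokenizer.from_pretrained("gpt2")
# model = NeuronModelForCausalLM.from_pretrained("gpt2", export=True, batch_size=1)
# print(generate_text(model, tokenizer, "My favorite moment of the day is",
#                     do_sample=True, temperature=0.9, max_length=64))
```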

The generation is highly configurable. Please refer to https://boincai.com/docs/transformers/generation_strategies for details.

Please be aware that:

for each model architecture, default values are provided for all parameters, but values passed to the generate method will take precedence,

the generation parameters can be stored in a generation_config.json file. When such a file is present in the model directory, it will be parsed to set the default parameters (the values passed to the generate method still take precedence).
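The precedence described above can be illustrated with a small merge function. This is only an illustration of the ordering, not the library's code; resolve_generation_params and the parameter values are hypothetical.

```python
import json
from pathlib import Path


def resolve_generation_params(architecture_defaults, model_dir, **call_kwargs):
    """Merge generation parameters from lowest to highest priority."""
    params = dict(architecture_defaults)
    config_file = Path(model_dir) / "generation_config.json"
    if config_file.is_file():
        # Values stored with the model override the architecture defaults.
        params.update(json.loads(config_file.read_text()))
    # Values passed to generate() override everything else.
    params.update(call_kwargs)
    return params
```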

Stable Diffusion

Optimum extends 🌍Diffusers to support inference on Neuron. To get started, make sure you have installed Diffusers:

You can also accelerate the inference of stable diffusion on neuronx devices (inf2 / trn1). There are four components which need to be exported to the .neuron format to boost the performance:

Text encoder

U-Net

VAE encoder

VAE decoder

Text-to-Image

The NeuronStableDiffusionPipeline class lets you generate images from a text prompt on neuron devices, much as you would with diffusers.

As with other tasks, you need to compile the models before you can run inference. The export can be done either via the CLI or via the NeuronStableDiffusionPipeline API. Here is an example of exporting stable diffusion components with NeuronStableDiffusionPipeline:

To enable the optimized computation of the U-Net attention scores, set the environment variable with export NEURON_FUSE_SOFTMAX=1.

Besides, don’t hesitate to tweak the compilation configuration to find the best tradeoff between performance and accuracy for your use case. By default, we suggest casting FP32 matrix multiplication operations to BF16, which offers good performance with a moderate loss of accuracy. Check out the guide from the AWS Neuron documentation to better understand the options for your compilation.

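A minimal sketch of such an export, assuming the pipeline accepts batch_size, height and width as static input shapes and the same auto_cast arguments as the NLP models. The 512x512 size and the export_stable_diffusion helper are hypothetical, and setting the variable through os.environ is assumed to be equivalent to the shell export as long as it happens before compilation.

```python
import os

# Enable the optimized U-Net attention computation before compiling.
os.environ["NEURON_FUSE_SOFTMAX"] = "1"

# Assumed settings: BF16 matmul casting and a fixed image size.
compiler_args = {"auto_cast": "matmul", "auto_cast_type": "bf16"}
input_shapes = {"batch_size": 1, "height": 512, "width": 512}


def export_stable_diffusion(model_id: str, save_dir: str):
    """Compile the pipeline components and save them (needs a Neuron instance)."""
    from optimum.neuron import NeuronStableDiffusionPipeline

    pipeline = NeuronStableDiffusionPipeline.from_pretrained(
        model_id, export=True, **compiler_args, **input_shapes
    )
    pipeline.save_pretrained(save_dir)
    return pipeline
```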

Now generate an image with a prompt on neuron:

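A minimal sketch of this step, assuming the compiled pipeline is called like a diffusers pipeline and returns an object whose images attribute holds PIL images. The text_to_image helper, the prompt and the file names are hypothetical.

```python
def text_to_image(pipeline, prompt: str, output_path: str) -> str:
    """Generate one image from a prompt and write it to disk."""
    image = pipeline(prompt).images[0]
    image.save(output_path)
    return output_path


# Usage on a Neuron instance (not executed here):
# from optimum.neuron import NeuronStableDiffusionPipeline
# pipeline = NeuronStableDiffusionPipeline.from_pretrained("sd_neuron/")
# text_to_image(pipeline, "a photo of an astronaut riding a horse on mars", "astronaut.png")
```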

Image-to-Image

With the NeuronStableDiffusionImg2ImgPipeline class, you can generate a new image conditioned on a text prompt and an initial image.

image

prompt

output

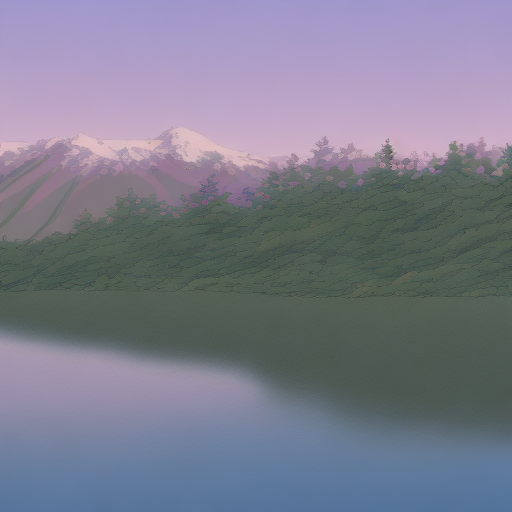

ghibli style, a fantasy landscape with snowcapped mountains, trees, lake with detailed reflection. warm colors, 8K

Inpaint

With the NeuronStableDiffusionInpaintPipeline class, you can edit specific parts of an image by providing a mask and a text prompt.

Example (image, mask_image, prompt, output):

Prompt: Face of a yellow cat, high resolution, sitting on a park bench

Stable Diffusion XL

Text-to-Image

Similar to Stable Diffusion, you can use the NeuronStableDiffusionXLPipeline API to export SDXL models and run inference on Neuron devices.

Now generate an image with a text prompt on neuron:

Image-to-Image

With NeuronStableDiffusionXLImg2ImgPipeline, you can pass an initial image and a text prompt to condition the generated image:

Example (image, prompt, output):

Prompt: a dog running, lake, moat

Inpaint

With NeuronStableDiffusionXLInpaintPipeline, pass the original image and a mask of what you want to replace in the original image. Then replace the masked area with content described in a prompt.

Example (image, mask_image, prompt, output):

Prompt: A deep sea diver floating

Refine Image Quality

SDXL includes a refiner model to denoise low-noise stage images generated from the base model. There are two ways to use the refiner:

use the base and refiner model together to produce a refined image.

use the base model to produce an image, and subsequently use the refiner model to add more details to the image.

Base + refiner model

Base to refiner model

[Figure: base image and refined image]

To avoid running out of memory on the Neuron device, finish all base inference and release the device memory before running the refiner.
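One way to follow this advice is to load the refiner only after the base pipeline has been dropped. The sketch below assumes the Neuron SDXL pipelines accept the same denoising_end, denoising_start and output_type arguments as their diffusers counterparts, and that deleting the Python reference is enough to release the device memory. The base_then_refiner helper, the 0.8 split and the directory names are hypothetical.

```python
import gc


def base_then_refiner(load_base, load_refiner, prompt, split=0.8, steps=40):
    """Run the base pipeline, free it, then run the refiner on its latents.

    load_base and load_refiner are zero-argument callables returning pipelines,
    so the refiner is only loaded after the base has been released.
    """
    base = load_base()
    latents = base(
        prompt=prompt,
        num_inference_steps=steps,
        denoising_end=split,
        output_type="latent",
    ).images
    # Drop the only reference to the base pipeline before loading the refiner.
    del base
    gc.collect()

    refiner = load_refiner()
    return refiner(
        prompt=prompt,
        num_inference_steps=steps,
        denoising_start=split,
        image=latents,
    ).images[0]


# Usage on a Neuron instance (not executed here):
# from optimum.neuron import NeuronStableDiffusionXLPipeline, NeuronStableDiffusionXLImg2ImgPipeline
# image = base_then_refiner(
#     lambda: NeuronStableDiffusionXLPipeline.from_pretrained("sd_neuron_xl/"),
#     lambda: NeuronStableDiffusionXLImg2ImgPipeline.from_pretrained("sd_neuron_xl_refiner/"),
#     "A majestic lion jumping from a big stone at night",
# )
```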

Happy inference with Neuron! 🚀
