Export a model to Inferentia

Summary

Exporting a PyTorch model to a Neuron model is as simple as:

optimum-cli export neuron \
  --model bert-base-uncased \
  --sequence_length 128 \
  --batch_size 1 \
  bert_neuron/

Check out the help for more options:

optimum-cli export neuron --help

Why compile to a Neuron model?

AWS provides two generations of the Inferentia accelerator, built for machine learning inference with higher throughput and lower latency at a lower cost: inf2 (NeuronCore-v2) and inf1 (NeuronCore-v1).

In production environments, to deploy 🤗 Transformers models on Neuron devices, you need to compile your models and export them to a serialized format before inference. Through Ahead-Of-Time (AOT) compilation with the Neuron Compiler (neuronx-cc or neuron-cc), your models are converted to serialized and optimized TorchScript modules.

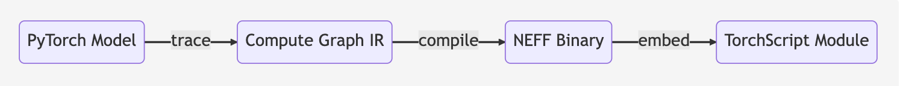

To understand a little more about the compilation, here are the general steps executed under the hood:

NEFF: Neuron Executable File Format, a binary executable that runs on Neuron devices.

Although pre-compilation avoids overhead during inference, a traced Neuron module has some limitations:

A traced Neuron module is static: it requires the fixed input shapes and data types that were used during compilation. As the model won’t be dynamically recompiled, inference will fail if either of these changes. (These limitations can be bypassed with dynamic batching and bucketing.)

Neuron models are hardware-specialized, which means:

Models traced with Neuron can no longer be executed in a non-Neuron environment.

Models compiled for inf1 (NeuronCore-v1) are not compatible with inf2 (NeuronCore-v2), and vice versa.
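
The bucketing workaround mentioned in the list above can be sketched in plain Python: you compile one model per sequence-length bucket, then route each input to the smallest bucket that fits. The bucket sizes and the `pick_bucket` helper are hypothetical and are not part of Optimum Neuron.

```python
from bisect import bisect_left

# Hypothetical sequence lengths; one compiled Neuron model would exist per bucket.
# The list must be sorted in ascending order for bisect to work.
BUCKETS = [32, 64, 128]


def pick_bucket(length, buckets=BUCKETS):
    """Return the smallest bucket that can hold `length` tokens."""
    idx = bisect_left(buckets, length)
    if idx == len(buckets):
        raise ValueError(
            f"input of length {length} exceeds the largest bucket ({buckets[-1]})"
        )
    return buckets[idx]


for n in (10, 64, 100):
    print(n, "->", pick_bucket(n))
```

Each input is then padded up to the chosen bucket size, so less compute is wasted on padding than with a single large static shape.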

In this guide, we’ll show you how to export your models to serialized models optimized for Neuron devices.

🤗 Optimum supports the Neuron export through configuration objects. These configuration objects come ready-made for a number of model architectures, and are designed to be easily extendable to other architectures.

To check the supported architectures, go to the configuration reference page.

Exporting a model to Neuron using the CLI

To export a 🤗 Transformers model to Neuron, you’ll first need to install some extra dependencies:

For Inf2


For Inf1


The Optimum Neuron export can be used through the Optimum command line:

In the last section, you can see some input shape options to pass when exporting a static Neuron model, meaning that the inputs used during inference must have exactly the shapes given during compilation. If you are going to use variable-size inputs, you can pad your inputs to the shape used for compilation as a workaround. If you want the batch size to be dynamic, you can pass --dynamic-batch-size to enable dynamic batching, which lets you use inputs with different batch sizes during inference, at a potential cost in latency.
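
As a minimal sketch of the padding workaround, here is how one tokenized example could be padded to the compiled sequence length. The `pad_to_static_shape` helper and the token ids are illustrative only, and the pad token id of 0 is an assumption that depends on your tokenizer.

```python
def pad_to_static_shape(input_ids, sequence_length, pad_token_id=0):
    """Pad one tokenized example so it matches the compiled sequence length."""
    if len(input_ids) > sequence_length:
        raise ValueError("input is longer than the compiled sequence length")
    n_pad = sequence_length - len(input_ids)
    # The attention mask marks real tokens with 1 and padding with 0.
    attention_mask = [1] * len(input_ids) + [0] * n_pad
    return input_ids + [pad_token_id] * n_pad, attention_mask


ids, mask = pad_to_static_shape([101, 7592, 102], sequence_length=8)
print(ids)
print(mask)
```

In practice a 🤗 Transformers tokenizer can do this for you when called with padding="max_length", max_length set to the compiled length, and truncation=True.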

Exporting a checkpoint can be done as follows:

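
If you drive the export from a script, the command can be assembled as an argument list. This is only a sketch: `build_export_command` is a hypothetical helper, and it uses only the options that appear in this guide (--model, --batch_size, --sequence_length, --dynamic-batch-size). The model name and output directory are taken from the summary example.

```python
import shlex


def build_export_command(model, output_dir, batch_size=1, sequence_length=128,
                         dynamic_batch_size=False):
    """Assemble an `optimum-cli export neuron` command as an argument list."""
    cmd = [
        "optimum-cli", "export", "neuron",
        "--model", model,
        "--batch_size", str(batch_size),
        "--sequence_length", str(sequence_length),
    ]
    if dynamic_batch_size:
        cmd.append("--dynamic-batch-size")
    cmd.append(output_dir)  # the output directory comes last
    return cmd


cmd = build_export_command("bert-base-uncased", "bert_neuron/")
print(shlex.join(cmd))
# On a machine with the Neuron SDK installed, you could then run:
# import subprocess; subprocess.run(cmd, check=True)
```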

You should see the following logs, which validate the model on Neuron devices by comparing it with the PyTorch model on CPU:

This exports a Neuron-compiled TorchScript module of the checkpoint defined by the --model argument.

As you can see, the task was automatically detected. This was possible because the model was on the Hub. For local models, you need to provide the --task argument; otherwise the export defaults to the model architecture without any task-specific head:
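
The rule above can be written as a small guard in a wrapper script. This is a sketch under assumptions: `task_arguments` is a hypothetical helper, and raising an error for a local model without a task is a design choice of this example (the CLI itself would fall back to the headless architecture).

```python
import os


def task_arguments(model, task=None):
    """Return the `--task` arguments to append to the export command."""
    if task is not None:
        # An explicit task disables automatic task detection.
        return ["--task", task]
    if os.path.isdir(model):
        # Local checkpoint: there is no Hub metadata to infer the task from.
        raise ValueError("pass a task explicitly when exporting a local model")
    return []  # Hub model: let the exporter detect the task


print(task_arguments("distilbert-base-uncased", "text-classification"))
```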

Note that providing the --task argument for a model on the Hub will disable the automatic task detection. The resulting model.neuron file can then be loaded and run on Neuron devices.

Exporting a model to Neuron via NeuronModel

You can also export your models to the Neuron format with the optimum.neuron.NeuronModelForXXX model classes. Here is an example:
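
A minimal sketch of such an export, assuming a sequence classification checkpoint and the `export=True` argument of `from_pretrained`. The `export_with_neuron_model` wrapper, the checkpoint name, and the save directory are illustrative. The compilation itself needs a machine with the Neuron SDK installed, so the call is left commented out.

```python
def export_with_neuron_model(model_id, save_dir, batch_size=1, sequence_length=128):
    """Compile a checkpoint for Neuron and save the compiled model locally."""
    # Imported lazily so this snippet can be loaded without the Neuron SDK.
    from optimum.neuron import NeuronModelForSequenceClassification

    model = NeuronModelForSequenceClassification.from_pretrained(
        model_id,
        export=True,  # compile now instead of loading an already compiled model
        batch_size=batch_size,  # static input shapes used for compilation
        sequence_length=sequence_length,
    )
    model.save_pretrained(save_dir)
    return model


# Example call (requires a Neuron device):
# export_with_neuron_model(
#     "distilbert-base-uncased-finetuned-sst-2-english", "distilbert_neuron/"
# )
```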

And the exported model can be used for inference directly with the NeuronModelForXXX class:

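
As a sketch of the inference step, assuming the model was compiled for sequence classification and saved to a local directory: the `classify` helper and its arguments are hypothetical, and the input is padded to the compiled sequence length because the compiled model is static.

```python
def classify(text, compiled_dir, tokenizer_id, sequence_length=128):
    """Run one sentence through a compiled Neuron model and return the class index."""
    # Imported lazily so this snippet can be loaded without the Neuron SDK.
    from transformers import AutoTokenizer
    from optimum.neuron import NeuronModelForSequenceClassification

    model = NeuronModelForSequenceClassification.from_pretrained(compiled_dir)
    tokenizer = AutoTokenizer.from_pretrained(tokenizer_id)
    inputs = tokenizer(
        text,
        padding="max_length",  # pad to the shape used at compilation time
        max_length=sequence_length,
        truncation=True,
        return_tensors="pt",
    )
    logits = model(**inputs).logits
    return int(logits.argmax(dim=-1).item())


# Example call (requires a Neuron device and a previously compiled model):
# classify("Hamilton is a great musical.", "distilbert_neuron/",
#          "distilbert-base-uncased-finetuned-sst-2-english")
```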

Exporting Stable Diffusion to Neuron

With the Optimum CLI, you can compile the components of the Stable Diffusion pipeline to accelerate inference on Neuron devices.

So far, we support the export of the following components in the pipeline:

CLIP text encoder

U-Net

VAE encoder

VAE decoder

“These blocks are chosen because they represent the bulk of the compute in the pipeline, and performance benchmarking has shown that running them on Neuron yields significant performance benefit.”

Besides, don’t hesitate to tweak the compilation configuration to find the best tradeoff between performance and accuracy for your use case. By default, we suggest casting FP32 matrix multiplication operations to BF16, which offers good performance with a moderate loss of accuracy. Check out the guide from the AWS Neuron documentation to better understand the options for your compilation.
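
To get a feel for the accuracy cost of a BF16 cast, the sketch below emulates BF16 rounding in pure Python and compares a small dot product computed from FP32 and from BF16-rounded inputs. It is an illustration only, not what the Neuron compiler does internally; `to_bf16` is a hypothetical helper that handles finite values only.

```python
import struct


def to_bf16(x):
    """Round a finite float to the nearest bfloat16 value (returned as a float)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # bfloat16 keeps the top 16 bits of a float32: round to nearest even
    # on the 16 low bits that get dropped, then clear them.
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


a = [0.1 * i for i in range(1, 9)]
b = [1.0 / i for i in range(1, 9)]

exact = sum(x * y for x, y in zip(a, b))
approx = sum(to_bf16(x) * to_bf16(y) for x, y in zip(a, b))

print("full precision:", exact)
print("bf16 inputs:   ", approx)
print("relative error:", abs(exact - approx) / exact)
```

BF16 keeps the 8 exponent bits of FP32 but only 7 explicit mantissa bits, so the range is preserved while each value carries roughly two to three significant decimal digits.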

Exporting a Stable Diffusion checkpoint can be done using the CLI:

Exporting Stable Diffusion XL to Neuron

Similar to Stable Diffusion, you can use the Optimum CLI to compile the components of the SDXL pipeline for inference on Neuron devices.

We support the export of the following components in the pipeline to boost the speed:

Text encoder

Second text encoder

U-Net (three times larger than the one in the Stable Diffusion pipeline)

VAE encoder

VAE decoder

“Stable Diffusion XL works especially well with images between 768 and 1024.”

Exporting an SDXL checkpoint can be done using the CLI:
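
The two diffusion exports described above could be assembled as follows. Treat this as a sketch under assumptions: `build_diffusion_export` is a hypothetical helper, the model IDs are illustrative, and the task names and the --height, --width, --auto_cast and --auto_cast_type options are recalled from the CLI help rather than taken from this guide, so check them against `optimum-cli export neuron --help` for your installed version.

```python
import shlex


def build_diffusion_export(model, task, output_dir, height, width, batch_size=1,
                           auto_cast="matmul", auto_cast_type="bf16"):
    """Assemble an export command for a diffusion pipeline (option names assumed)."""
    return [
        "optimum-cli", "export", "neuron",
        "--model", model,
        "--task", task,
        "--batch_size", str(batch_size),
        "--height", str(height),  # image height in pixels
        "--width", str(width),    # image width in pixels
        "--auto_cast", auto_cast,            # cast FP32 matmul operations...
        "--auto_cast_type", auto_cast_type,  # ...to BF16
        output_dir,
    ]


sd_cmd = build_diffusion_export(
    "stabilityai/stable-diffusion-2-1-base", "stable-diffusion", "sd_neuron/", 512, 512
)
sdxl_cmd = build_diffusion_export(
    "stabilityai/stable-diffusion-xl-base-1.0", "stable-diffusion-xl",
    "sdxl_neuron/", 1024, 1024,
)
print(shlex.join(sd_cmd))
print(shlex.join(sdxl_cmd))
```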

Selecting a task

Specifying a --task should not be necessary in most cases when exporting a model from the Hugging Face Hub.

However, if you need to check which tasks the Neuron export supports for a given model architecture, we’ve got you covered. First, you can check the list of supported tasks here.

For each model architecture, you can find the list of supported tasks via the exporters.tasks.TasksManager. For example, for DistilBERT, for the Neuron export, we have:
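
A minimal sketch of that lookup, assuming optimum and optimum-neuron are installed. The `neuron_tasks_for` wrapper is hypothetical, and the exact module path used to register the Neuron configurations may differ between versions.

```python
def neuron_tasks_for(model_type):
    """List the tasks the Neuron export supports for a model architecture."""
    # Imported lazily so this snippet can be loaded without optimum installed.
    from optimum.exporters.tasks import TasksManager
    # Importing the Neuron configurations registers them with the TasksManager.
    from optimum.exporters.neuron import model_configs  # noqa: F401

    tasks = TasksManager.get_supported_tasks_for_model_type(model_type, "neuron")
    return sorted(tasks)  # the mapping is keyed by task name


# Example call:
# print(neuron_tasks_for("distilbert"))
```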

You can then pass one of these tasks to the --task argument in the optimum-cli export neuron command, as mentioned above.
