Depth estimation

Depth estimation datasets are used to train a model to approximate the relative distance of every pixel in an image from the camera, also known as depth. The applications enabled by these datasets primarily lie in areas like visual machine perception and perception in robotics. Example applications include mapping streets for self-driving cars. This guide will show you how to apply transformations to a depth estimation dataset.

Before you start, make sure you have an up-to-date version of albumentations installed:

pip install -U albumentations

Albumentations is a Python library for performing data augmentation for computer vision. It supports various computer vision tasks such as image classification, object detection, segmentation, and keypoint estimation.

This guide uses the NYU Depth V2 dataset, which comprises video sequences from various indoor scenes recorded by RGB and depth cameras. The dataset consists of scenes from 3 cities and provides images along with their depth maps as labels.

Load the train split of the dataset and take a look at an example:

>>> from datasets import load_dataset

>>> train_dataset = load_dataset("sayakpaul/nyu_depth_v2", split="train")
>>> index = 17
>>> example = train_dataset[index]
>>> example
{'image': <PIL.PngImagePlugin.PngImageFile image mode=RGB size=640x480>,
 'depth_map': <PIL.TiffImagePlugin.TiffImageFile image mode=F size=640x480>}

The dataset has two fields:

image: a PIL PNG image object with uint8 data type.

depth_map: a PIL TIFF image object with float32 data type, which is the depth map of the image.

Note that the JPEG and PNG formats can only store uint8 or uint16 data. Because the depth map is float32 data, it can’t be stored as PNG or JPEG. The TIFF format, however, supports a wider range of data types, including float32, so the depth map can be saved as TIFF.
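To make the storage point concrete, here is a minimal sketch, assuming NumPy and Pillow are installed. The tiny depth array is made up for illustration. It shows that casting float32 depths to uint8 discards the fractional part, while a round trip through an in-memory TIFF keeps the values intact.

```python
import io

import numpy as np
from PIL import Image

# Hypothetical depth values, stored as float32 like the dataset's depth maps.
depth = np.array([[0.73, 1.52], [2.48, 9.99]], dtype=np.float32)

# Casting to uint8 truncates the fractional part, so precision is lost.
as_uint8 = depth.astype(np.uint8)
uint8_error = float(np.abs(depth - as_uint8.astype(np.float32)).max())

# A 2D float32 array becomes a mode "F" PIL image, which TIFF can store as-is.
buffer = io.BytesIO()
Image.fromarray(depth).save(buffer, format="TIFF")
buffer.seek(0)
restored = np.array(Image.open(buffer))

print(as_uint8.tolist())                # [[0, 1], [2, 9]]
print(restored.dtype)                   # float32
print(np.array_equal(depth, restored))  # True
```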

Next, check out an image with:

>>> example["image"]

Before looking at the depth map, convert its data type to uint8 using .convert('RGB'), because PIL can’t display float32 images. Now take a look at the corresponding depth map:

>>> example["depth_map"].convert("RGB")

It’s all black! You’ll need to add some color to the depth map to visualize it properly. You can either apply a colormap automatically at display time with plt.imshow(), or create a colored depth map with plt.cm and then display it. This example uses the latter approach, because the colored depth map can then be saved to disk. (The utility used in this guide is taken from the FastDepth repository.)
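A minimal sketch of such a colorizing helper, written independently of the FastDepth code and assuming NumPy and Matplotlib are installed, might look like the following. The function name colorize_depth, the viridis colormap, and the synthetic depth ramp are illustrative choices, not part of the original utility.

```python
import matplotlib.pyplot as plt
import numpy as np


def colorize_depth(depth, d_min=None, d_max=None, cmap=plt.cm.viridis):
    """Map a 2D depth array to an (H, W, 3) uint8 RGB image."""
    depth = np.asarray(depth, dtype=np.float32)
    d_min = float(depth.min()) if d_min is None else d_min
    d_max = float(depth.max()) if d_max is None else d_max
    # Scale depths to [0, 1]; the small floor avoids dividing by zero on flat maps.
    relative = (depth - d_min) / max(d_max - d_min, 1e-8)
    # The colormap returns RGBA floats in [0, 1]; drop alpha and rescale to 0-255.
    return (255 * cmap(relative)[..., :3]).astype(np.uint8)


# Synthetic depth ramp standing in for a real depth map.
depth = np.linspace(0.0, 10.0, 480 * 640, dtype=np.float32).reshape(480, 640)
colored = colorize_depth(depth)
print(colored.shape, colored.dtype)  # (480, 640, 3) uint8
```

The returned array can be displayed with plt.imshow(colored), and because it is a regular uint8 RGB image it can also be written to disk.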

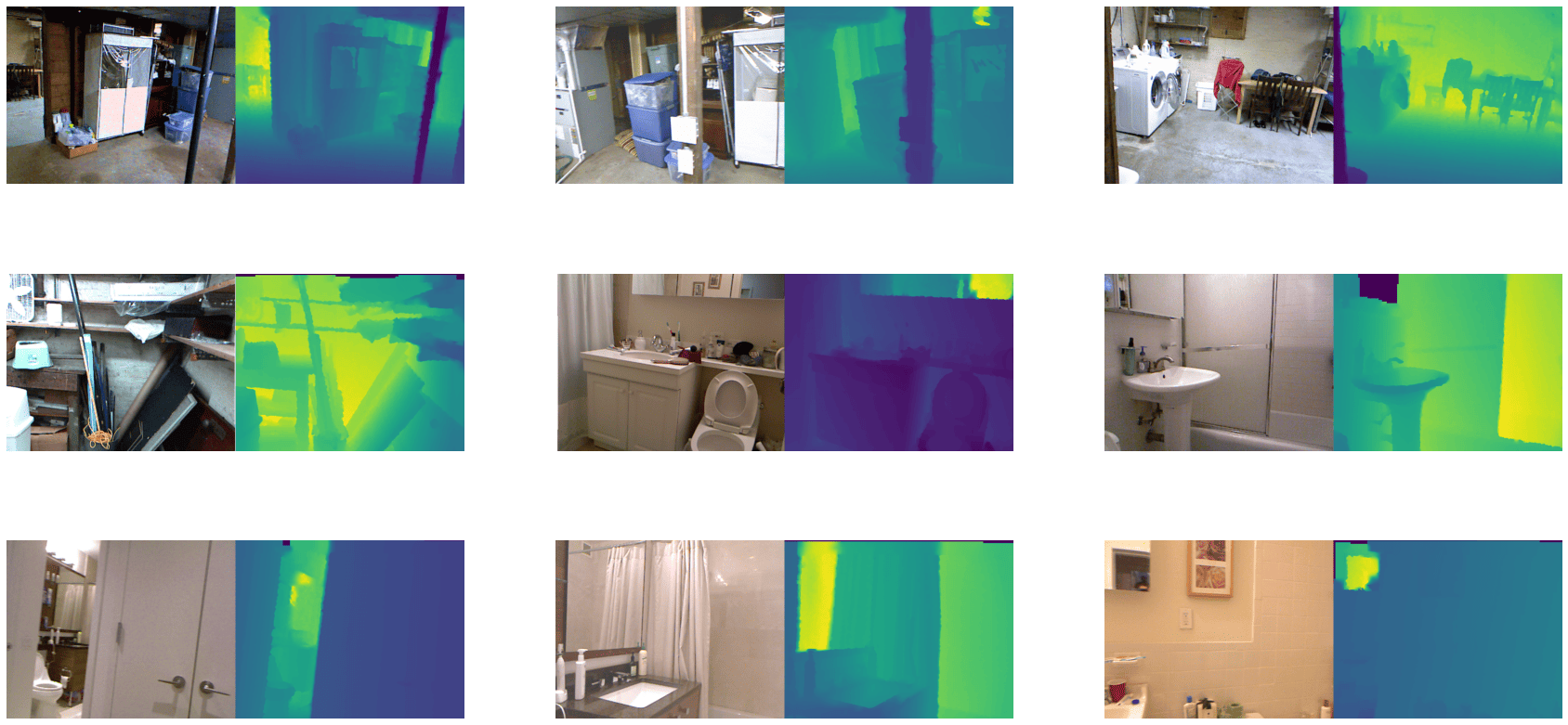

You can also visualize several different images and their corresponding depth maps.

Now apply some augmentations with albumentations. The augmentation transformations include:

Random horizontal flipping

Random cropping

Random brightness and contrast

Random gamma correction

Random hue saturation

Additionally, define a mapping to better reflect the target key name.

With additional_targets defined, you can pass the target depth maps to the depth argument of aug instead of mask. You’ll notice this change in the apply_transforms() function defined below.

Create a function to apply the transformation to the images as well as their depth maps:

Use the set_transform() function to apply the transformation on the fly to batches of the dataset, which consumes less disk space:

>>> train_dataset.set_transform(apply_transforms)

You can verify the transformation worked by indexing into the pixel_values and labels of an example image:

Visualize the same transformation on the image’s corresponding depth map:

You can also visualize multiple training samples reusing the previous random_indices:
