Semantic similarity with LoRA

Low-Rank Adaptation (LoRA) is a reparametrization method that aims to reduce the number of trainable parameters with low-rank representations. Instead of updating a weight matrix directly, LoRA represents the weight update as the product of two smaller low-rank matrices, and only these matrices are trained. All the pretrained model parameters remain frozen. After training, the low-rank matrices can be merged back into the original weights. This makes a LoRA model more efficient to store and train because there are significantly fewer trainable parameters.
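To make the parameter savings concrete, here is a minimal pure-Python sketch (not PEFT's implementation) of how a LoRA update is formed and merged. The frozen weight W (d × k) is combined with the product of two trainable factors B (d × r) and A (r × k), scaled by alpha / r, which is the scaling convention of the LoRA paper and of PEFT's lora_alpha. The 1024 × 1024 matrix size and rank 8 in the usage lines are illustrative assumptions.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merge_lora(W, B, A, alpha, r):
    """Return W + (alpha / r) * (B @ A): the frozen weight plus the scaled low-rank update."""
    delta = matmul(B, A)  # (d x r) @ (r x k) gives a d x k update
    scale = alpha / r
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(W, delta)
    ]


def trainable_params(d, k, r):
    """Trainable parameters: full fine-tuning of a d x k matrix vs. LoRA factors B and A."""
    return d * k, r * (d + k)


full, lora = trainable_params(1024, 1024, r=8)
print(full, lora, f"{lora / full:.2%}")  # 1048576 16384 1.56%

W = [[0.0, 0.0], [0.0, 0.0]]
B = [[1.0], [2.0]]  # d x r with r = 1
A = [[3.0, 4.0]]    # r x k
print(merge_lora(W, B, A, alpha=2, r=1))  # [[6.0, 8.0], [12.0, 16.0]]
```

Only B and A receive gradients during training; once they are merged into W, the model has the same shape and inference cost as the original.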

💡 Read LoRA: Low-Rank Adaptation of Large Language Models to learn more about LoRA.

In this guide, we’ll be using a LoRA script to fine-tune an intfloat/e5-large-v2 model on the smangrul/amazon_esci dataset for semantic similarity tasks. Feel free to explore the script to learn how things work in greater detail!

Setup

Start by installing 🌍 PEFT from source, and then navigate to the directory containing the training scripts for fine-tuning an embedding model with LoRA:


```bash
cd peft/examples/feature_extraction
```

Install the required libraries with:


```bash
pip install -r requirements.txt
```

Next, import all the necessary libraries:

- 🌍 Transformers for loading the intfloat/e5-large-v2 model and tokenizer
- 🌍 Accelerate for the training loop
- 🌍 Datasets for loading and preparing the smangrul/amazon_esci dataset for training and inference
- 🌍 Evaluate for evaluating the model’s performance
- 🌍 PEFT for setting up the LoRA configuration and creating the PEFT model
- 🌍 boincai_hub for uploading the trained model to the HF Hub
- hnswlib for creating the search index and doing fast approximate nearest neighbor search

It is assumed that PyTorch with CUDA support is already installed.

Train

Launch the training script with accelerate launch and pass your hyperparameters along with the --use_peft argument to enable LoRA.

This guide uses the following LoraConfig:


Here’s what a full set of script arguments may look like when running in Colab on a V100 GPU with standard RAM:


Dataset for semantic similarity

The dataset we’ll be using is a small subset of the esci-data dataset (available on the Hub as smangrul/amazon_esci). Each sample contains a tuple of (query, product_title, relevance_label), where relevance_label is 1 if the product matches the intent of the query and 0 otherwise.

Our task is to build an embedding model that can retrieve semantically similar products given a product query. This is usually the first stage in building a product search engine: retrieving all the potentially relevant products for a given query. Scoring the query against millions of products with a cross-encoder, which has to process every query-product pair jointly, would quickly become too expensive. Instead, a bi-encoder Transformer model embeds the query and the products separately in the same latent embedding space, so the top K most similar products can be retrieved by nearest neighbor search. The millions of products are embedded offline to create a search index. At run time, only the query is embedded by the model, and products are retrieved from the search index with a fast approximate nearest neighbor search library such as FAISS or HNSWlib.
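The retrieval step boils down to a nearest neighbor search in embedding space. The pure-Python sketch below performs exact (brute-force) top-K search by cosine similarity over a toy dictionary of product vectors; libraries such as FAISS or HNSWlib approximate this same search so that it scales to millions of products. The vectors and ids are made up for illustration.

```python
import heapq
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def top_k(query_vec, product_vecs, k):
    """Exact top-k search: score every product, keep the k highest. ANN libraries approximate this."""
    scored = ((cosine(query_vec, vec), pid) for pid, vec in product_vecs.items())
    return [(pid, score) for score, pid in heapq.nlargest(k, scored)]


products = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
print(top_k([1.0, 0.1], products, k=2))  # products 0 and 2, highest score first
```

Exact search costs one similarity computation per product for every query, which is why large catalogs rely on an approximate index instead.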

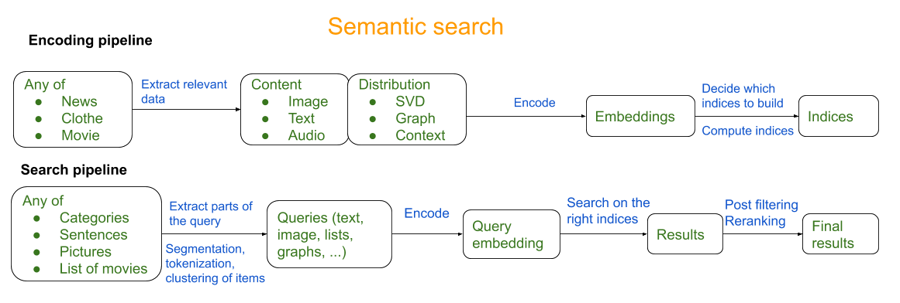

The next stage involves reranking the retrieved list of products to return the most relevant ones; this stage can utilize cross-encoder based models as the cross-join between the query and a limited set of retrieved products. The diagram below from awesome-semantic-search outlines a rough semantic search pipeline:

In this guide, we explore the first stage: training an embedding model to predict semantically similar products given a product query.

Training script deep dive

We use PEFT-LoRA to fine-tune e5-large-v2, which was at the top of the MTEB benchmark at the time of writing.

AutoModelForSentenceEmbedding returns the query and product embeddings, and the mean_pooling function pools them across the sequence dimension and normalizes them:
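Mean pooling with an attention mask averages only the real (non-padding) token vectors, and L2-normalization then scales the result to unit length so that a dot product equals cosine similarity. The plain-Python stand-in below illustrates that technique on lists; it is not the script's PyTorch code, and the function names are hypothetical.

```python
import math


def masked_mean_pool(token_embeddings, attention_mask):
    """Average the token vectors whose mask value is 1; padding tokens (mask 0) are ignored."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            for i, x in enumerate(vec):
                summed[i] += x
            count += 1
    count = max(count, 1)  # guard against an all-padding sequence
    return [s / count for s in summed]


def l2_normalize(vec, eps=1e-12):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / max(norm, eps) for x in vec]


tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]  # last row is a padding token
mask = [1, 1, 0]
print(masked_mean_pool(tokens, mask))  # [2.0, 3.0]
print(l2_normalize([3.0, 4.0]))        # [0.6, 0.8]
```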


The get_cosine_embeddings function computes the cosine similarity between query and product embeddings, and the get_loss function computes the loss. The loss teaches the model to assign a cosine score of 1 to relevant query-product pairs and a score of 0 or below to irrelevant ones.
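One loss consistent with this description is a mean squared error that pulls relevant pairs toward a cosine score of 1 and penalizes irrelevant pairs only while their score stays above 0. The plain-Python sketch below is an assumption about the shape of such a loss, not a copy of get_loss. Because the embeddings are already L2-normalized, cosine similarity reduces to a dot product.

```python
def cosine_scores(query_embs, product_embs):
    """Pairwise scores for aligned (query, product) pairs of L2-normalized embeddings."""
    return [sum(q * p for q, p in zip(qe, pe)) for qe, pe in zip(query_embs, product_embs)]


def relevance_loss(scores, labels):
    """Mean squared error: relevant pairs (label 1) toward 1, irrelevant pairs (label 0) to <= 0."""
    errors = []
    for score, label in zip(scores, labels):
        if label == 1:
            errors.append(1.0 - score)
        else:
            errors.append(max(score, 0.0))  # no penalty once an irrelevant pair scores <= 0
    return sum(e * e for e in errors) / len(errors)


print(relevance_loss([1.0, -0.5], [1, 0]))  # 0.0: both pairs already scored as desired
print(relevance_loss([0.5, 0.5], [1, 0]))   # 0.25
```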

Define the PeftConfig with your LoRA hyperparameters and create a PeftModel. We use 🌍 Accelerate to handle device management, mixed precision training, gradient accumulation, WandB tracking, and saving/loading utilities.

Results

The table below compares the training time, the largest batch size that fit in Colab, and the best ROC-AUC score of the pretrained model, the PEFT model, and a fully fine-tuned model:

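| Training type | Training time | Batch size that fits | Best ROC-AUC (higher is better) |
|---|---|---|---|
| Pre-Trained e5-large-v2 | - | - | 0.68 |
| PEFT | 1.73 | 64 | 0.787 |
| Full Fine-Tuning | 2.33 | 32 | 0.7969 |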

The PEFT-LoRA model trains 1.35X faster and fits a 2X larger batch size than the fully fine-tuned model, while its performance remains comparable, with a relative drop of 1.24% in ROC-AUC. This gap can probably be closed with bigger models, as mentioned in The Power of Scale for Parameter-Efficient Prompt Tuning.
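The headline comparisons follow directly from the results table; this quick check recomputes them.

```python
# Values copied from the results table
peft_time, full_time = 1.73, 2.33
peft_batch, full_batch = 64, 32
peft_auc, full_auc = 0.787, 0.7969

speedup = full_time / peft_time
batch_ratio = peft_batch / full_batch
relative_change = (peft_auc - full_auc) / full_auc * 100  # percent change vs. full fine-tuning

print(f"speedup: {speedup:.2f}x")                          # speedup: 1.35x
print(f"batch size ratio: {batch_ratio:.0f}x")             # batch size ratio: 2x
print(f"relative ROC-AUC change: {relative_change:.2f}%")  # relative ROC-AUC change: -1.24%
```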

Inference

Let’s go! Now that we have the model, we need to create a search index of all the products in our catalog. Please refer to peft_lora_embedding_semantic_similarity_inference.ipynb for the complete inference code.

Get a mapping from ids to products, which we call ids_to_products_dict:
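Because the same product title can appear in many (query, product_title) pairs, the titles are deduplicated before they are assigned ids; integer ids are also what HNSWlib uses as labels. A minimal sketch, where build_ids_to_products is a hypothetical helper and the titles are made-up placeholders:

```python
def build_ids_to_products(product_titles):
    """Deduplicate product titles and assign each unique title an integer id."""
    unique_titles = sorted(set(product_titles))  # sorted() keeps id assignment deterministic
    return {i: title for i, title in enumerate(unique_titles)}


titles = ["Book B", "Book A", "Book B"]  # hypothetical titles with a duplicate
ids_to_products_dict = build_ids_to_products(titles)
print(ids_to_products_dict)  # {0: 'Book A', 1: 'Book B'}
```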


Use the trained smangrul/peft_lora_e5_ecommerce_semantic_search_colab model to get the product embeddings:
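Product embeddings are computed offline, in batches, and keyed by the same ids. The sketch below shows only that batching pattern: embed_batch is a hypothetical stand-in for a call that tokenizes a batch of titles and runs the trained model, and the toy embedder in the usage lines exists only so the example runs.

```python
from typing import Callable, Dict, List, Sequence

Embedder = Callable[[Sequence[str]], List[List[float]]]


def embed_catalog(
    ids_to_products: Dict[int, str], embed_batch: Embedder, batch_size: int = 128
) -> Dict[int, List[float]]:
    """Embed every product title in batches and return {product_id: embedding}."""
    ids = sorted(ids_to_products)
    embeddings: Dict[int, List[float]] = {}
    for start in range(0, len(ids), batch_size):
        batch_ids = ids[start:start + batch_size]
        vectors = embed_batch([ids_to_products[i] for i in batch_ids])
        embeddings.update(zip(batch_ids, vectors))
    return embeddings


# Toy embedder for illustration only: records batch sizes, maps each title to [len(title)]
batch_sizes = []


def toy_embed(titles):
    batch_sizes.append(len(titles))
    return [[float(len(t))] for t in titles]


catalog = {0: "abc", 1: "de", 2: "f"}
result = embed_catalog(catalog, toy_embed, batch_size=2)
print(result)  # {0: [3.0], 1: [2.0], 2: [1.0]}
```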


Create a search index using HNSWlib:


Get the query embeddings and nearest neighbors:
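HNSWlib's knn_query returns integer labels and distances. In the "ip" space the distance is 1 minus the inner product, so for normalized embeddings the cosine similarity is 1 minus the distance. The hypothetical helper below maps one query's results back to product titles with an ids_to_products_dict-style lookup; the query embedding itself would come from the same model used for the products.

```python
from typing import Dict, List, Sequence, Tuple


def to_ranked_products(
    labels: Sequence[int], distances: Sequence[float], ids_to_products: Dict[int, str]
) -> List[Tuple[str, float]]:
    """Convert one row of knn_query output into (product_title, cosine_similarity) pairs."""
    return [
        (ids_to_products[int(label)], 1.0 - float(dist))  # "ip" distance = 1 - inner product
        for label, dist in zip(labels, distances)
    ]


ids_to_products = {0: "Product A", 1: "Product B", 2: "Product C"}
print(to_ranked_products([2, 0], [0.25, 0.5], ids_to_products))
# [('Product C', 0.75), ('Product A', 0.5)]
```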


Let’s test it out with the query "deep learning books":


Output:


Books on deep learning and machine learning are retrieved even though machine learning wasn’t mentioned in the query. This suggests that the model has learned, from the purchase behavior of customers on Amazon, that these books are semantically relevant to the query.

The next steps would ideally involve using ONNX/TensorRT to optimize the model and using a Triton server to host it. Check out 🌍 Optimum for related optimizations for efficient serving!
